Hack Week December 2017 - Who's This?

In December 2017 the BBC iPlayer on TV teams took part in a hack day. Unlike some hack events there was no specific theme set out; engineers were given free rein to hack away on whatever they felt like. In my previous hack week post from May 2017 I mentioned that I wanted to look into the more niche services available on AWS. This time round I decided I wanted to continue this theme, and so “Who’s This?” was born…

For my hack day I decided to set myself the challenge of building an equivalent of Amazon X-Ray inside BBC iPlayer. I wasn’t going to be able to get feature parity with X-Ray in just one day, so I instead focused on an MVP version with one key feature - identifying those who are on screen.

Taking a screenshot

The first stage of the process was to be able to take a screenshot of a video whilst it is playing; this is relatively straightforward (providing your video is played from a video tag).

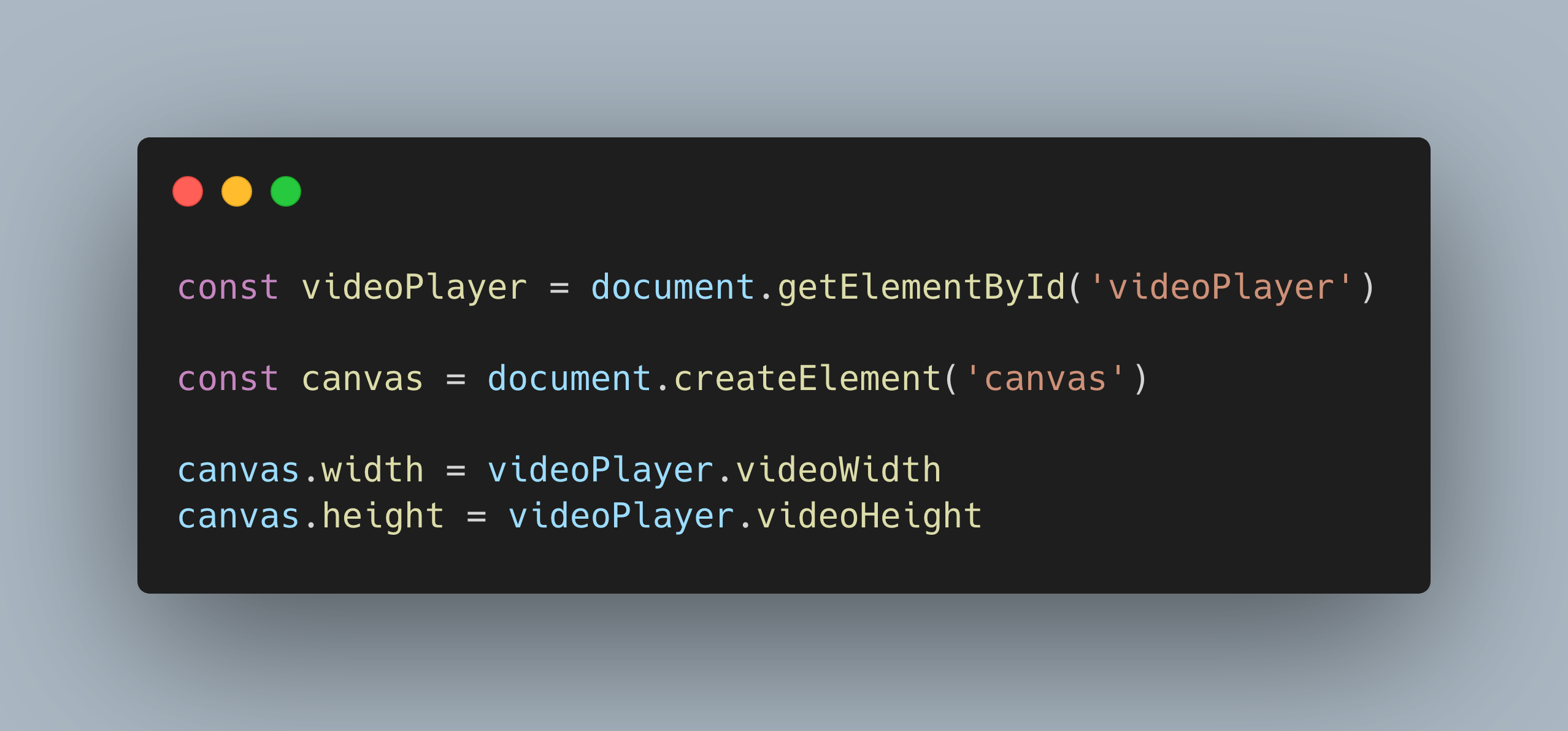

The second step is to create a canvas element that is the same width and height as your video element:

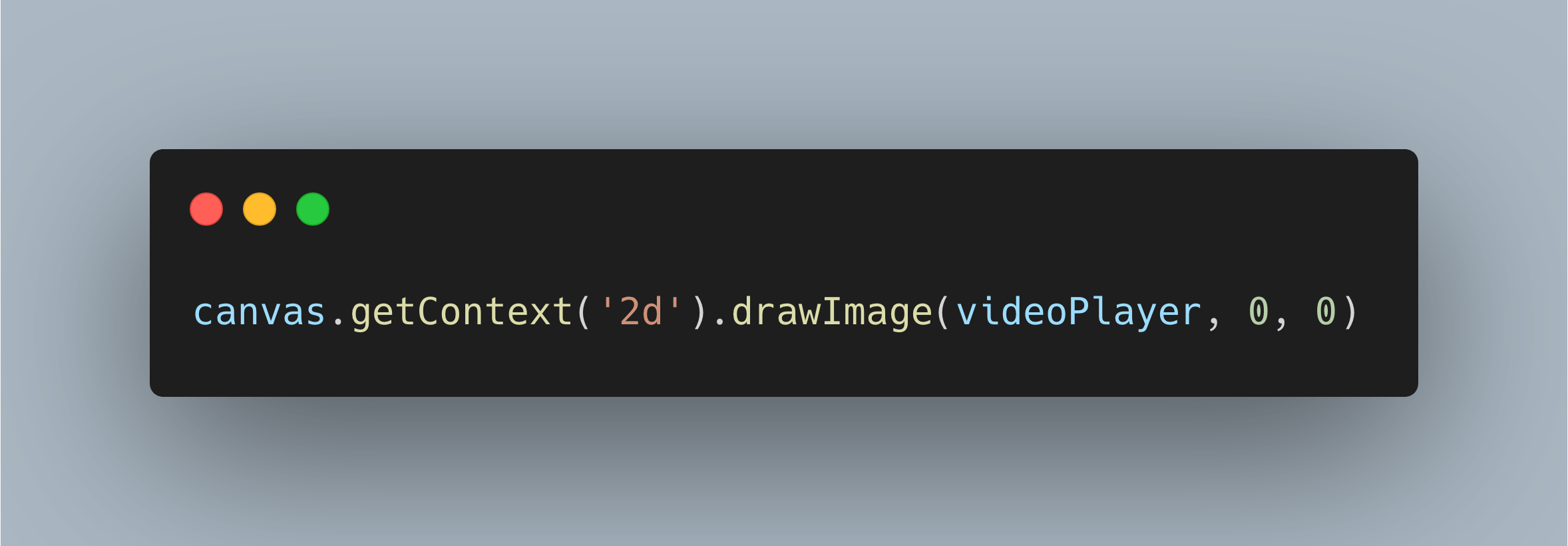

Once this is done you can use the Canvas 2D API to draw an image onto the canvas element that was created above. Note that as we haven’t added the canvas element to the DOM you can’t actually see any visual indication that a screenshot is being taken.

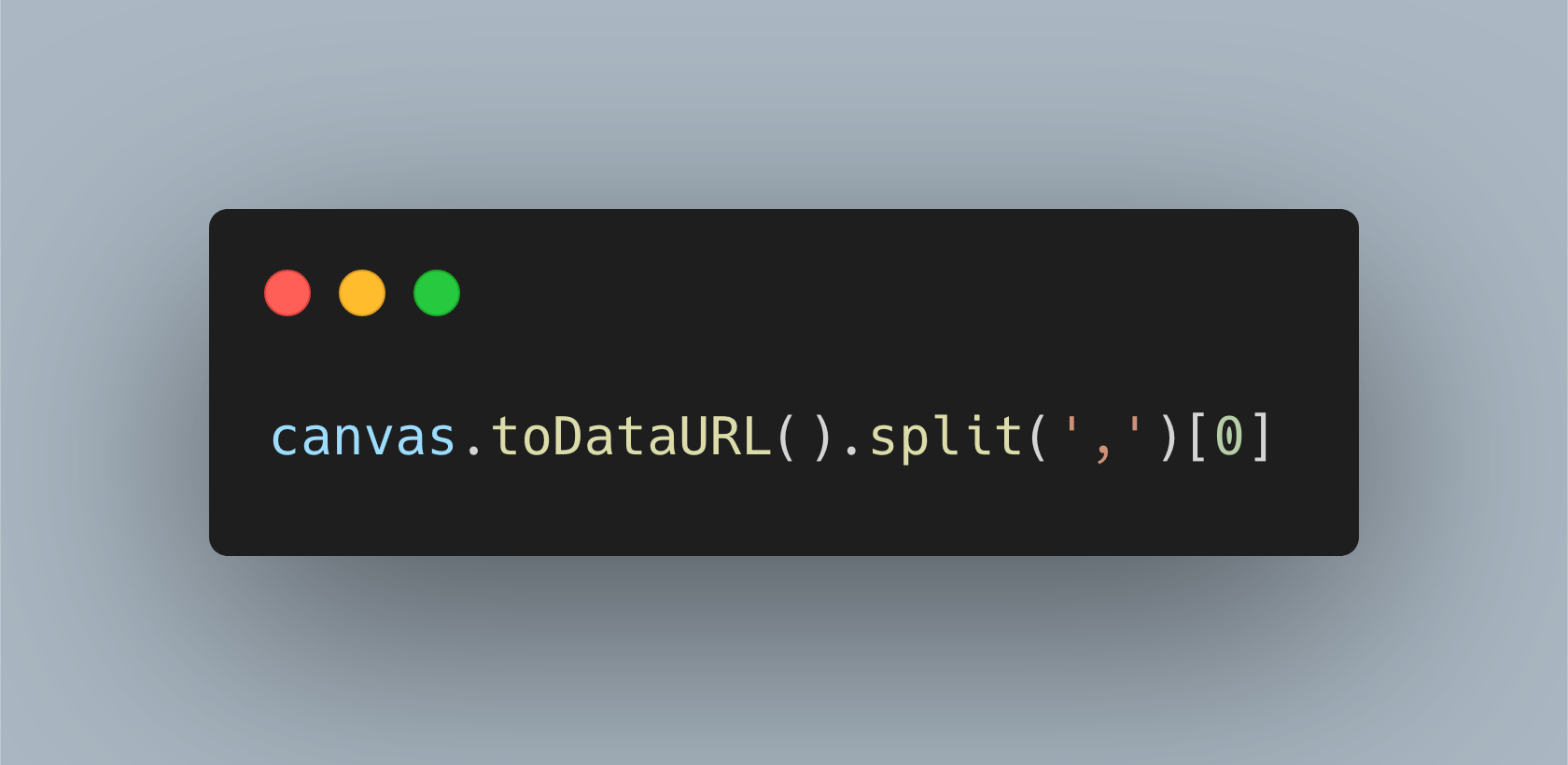

The final step of creating the screenshot is to convert the image into a data URI; a data URI in this context can be thought of as a Base64-encoded image. Luckily this is simple with the canvas API: calling toDataURL() on the canvas element returns the image as a data URI.
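Putting the steps above together, a minimal sketch of the capture might look like the following. The function name `captureFrame` and the injectable `doc` parameter are my own for illustration, not taken from the original hack, and I have assumed the video's intrinsic size (`videoWidth` / `videoHeight`) and a JPEG output are what you want.

```javascript
// Sketch only: captureFrame and its `doc` parameter are illustrative names.
// `doc` defaults to the browser's document and exists so the function can be
// exercised outside a browser with a stand-in object.
function captureFrame(video, doc = document) {
  // Create an off-screen canvas matching the video's intrinsic dimensions.
  const canvas = doc.createElement('canvas');
  canvas.width = video.videoWidth;
  canvas.height = video.videoHeight;

  // Draw the current video frame onto the canvas via the Canvas 2D API.
  const context = canvas.getContext('2d');
  context.drawImage(video, 0, 0, canvas.width, canvas.height);

  // Serialise the canvas contents as a Base64-encoded data URI.
  return canvas.toDataURL('image/jpeg');
}
```

In a page this could be called as `captureFrame(document.querySelector('video'))`. Bear in mind that `toDataURL()` throws a security error if the canvas has been tainted by cross-origin media, so the video needs to be same-origin or served with suitable CORS headers.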

Facial recognition

Now that we have the image, we need to figure out how to identify the people within the image - which is where AWS comes in.

In a previous post I explained how I used AWS Rekognition to compare two images to determine whether or not the same face was in both. AWS Rekognition can also be used to recognise celebrities, which is what we’ll use to recognise who is on screen in our image.

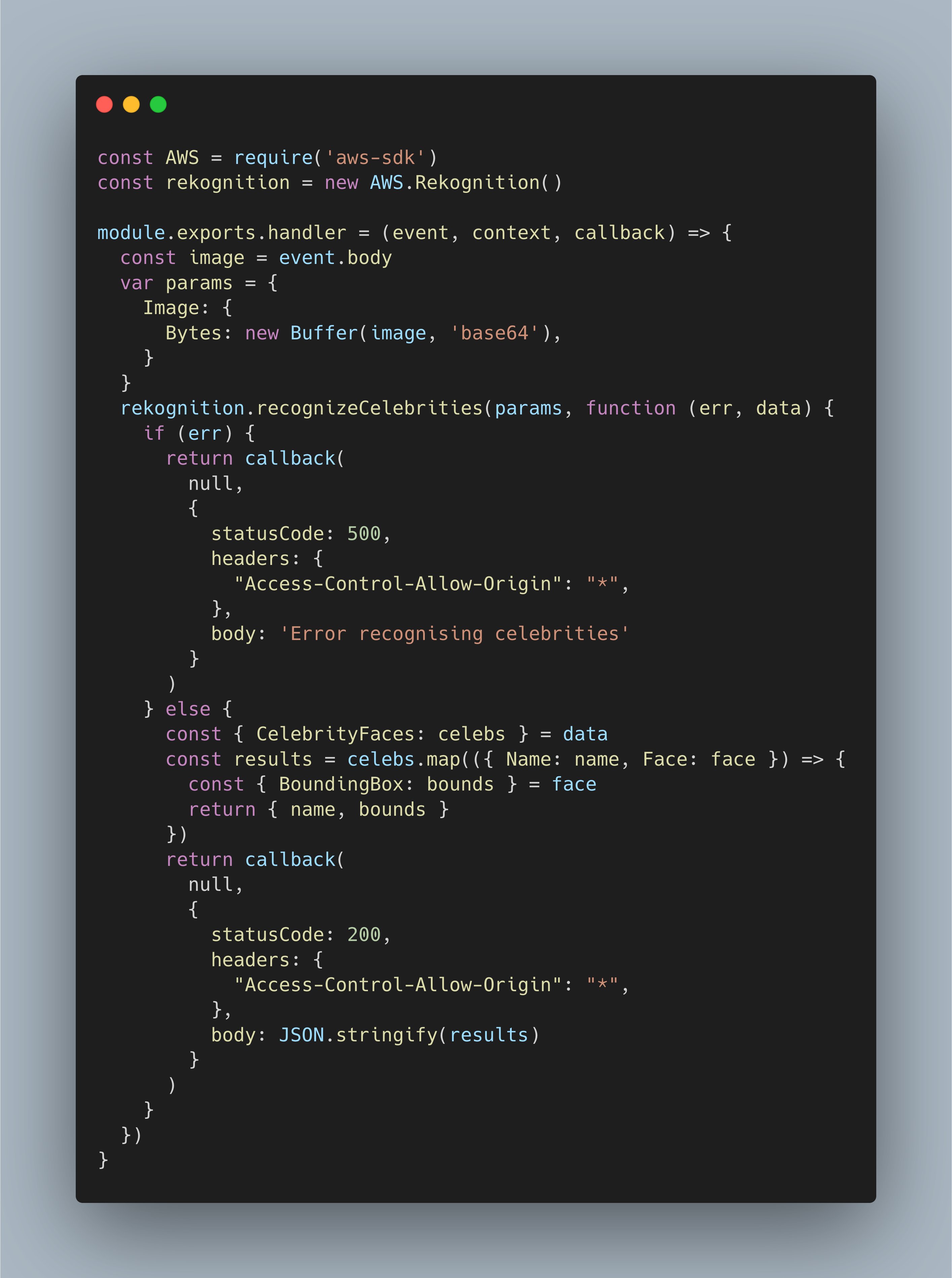

Given that I only had a day to complete my hack I wanted something simple I could get up and running quickly, so the back-end stack became an AWS Lambda function fronted by AWS API Gateway. The client sends a POST request containing the data URI we grabbed in the first step; we can then use the Rekognition API to pull out the names and bounding boxes of the people in the image.
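As a rough illustration of that flow, here is a sketch of what the Lambda side could look like. Everything named here is an assumption rather than the code from the hack: the request body shape (`{ "image": "<data URI>" }`), the helper names, and the `recognize` function, which is injected so the sketch carries no SDK dependency. With the AWS SDK for JavaScript v3 it could be wired up as `(params) => client.send(new RecognizeCelebritiesCommand(params))`.

```javascript
// Strip the "data:image/...;base64," prefix and decode the payload to bytes,
// which is the form Rekognition expects in Image.Bytes.
function dataUriToBuffer(dataUri) {
  const match = /^data:image\/[a-z0-9.+-]+;base64,(.+)$/i.exec(dataUri);
  if (!match) {
    throw new Error('Expected a Base64-encoded image data URI');
  }
  return Buffer.from(match[1], 'base64');
}

// Reduce a RecognizeCelebrities response to just names and bounding boxes.
// BoundingBox values (Left, Top, Width, Height) are ratios of the image size.
function extractCelebrities(response) {
  return (response.CelebrityFaces || []).map((celebrity) => ({
    name: celebrity.Name,
    box: celebrity.Face.BoundingBox,
  }));
}

// Build a Lambda handler around an injected `recognize` function
// (hypothetical wiring; see the lead-in for an SDK v3 example).
function createHandler(recognize) {
  return async (event) => {
    // Assumes API Gateway proxy integration, where event.body is a JSON string.
    const { image } = JSON.parse(event.body);
    const response = await recognize({ Image: { Bytes: dataUriToBuffer(image) } });
    return {
      statusCode: 200,
      body: JSON.stringify(extractCelebrities(response)),
    };
  };
}
```

Injecting `recognize` keeps the parsing and response-shaping logic easy to try out locally without AWS credentials.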

Displaying the results

Now that we’ve got some data about who is in the image (and where their faces are) we can do something on the screen to let the user know. It was getting towards the end of the day at this point, though, so I opted for a quick-and-very-dirty solution of just drawing a labelled box around each person on screen.
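For a flavour of what that involves, the sketch below converts a Rekognition bounding box (whose values are ratios of the image's width and height) into pixels and overlays a labelled box. The function names, the styling, and the `{ name, box }` input shape are illustrative assumptions, not the original hack's code. It also assumes the container is a positioned element exactly covering the displayed video, and it ignores any letterboxing.

```javascript
// Convert a ratio-based bounding box into pixel values for a given area.
function toPixelBox(box, width, height) {
  return {
    left: Math.round(box.Left * width),
    top: Math.round(box.Top * height),
    width: Math.round(box.Width * width),
    height: Math.round(box.Height * height),
  };
}

// Append an absolutely positioned, labelled box to `container`.
// `doc` defaults to the browser's document; it is a parameter only so the
// function can be tried outside a browser.
function drawLabelledBox(container, person, doc = document) {
  const pixels = toPixelBox(person.box, container.clientWidth, container.clientHeight);
  const element = doc.createElement('div');
  element.textContent = person.name;
  Object.assign(element.style, {
    position: 'absolute',
    left: `${pixels.left}px`,
    top: `${pixels.top}px`,
    width: `${pixels.width}px`,
    height: `${pixels.height}px`,
    border: '2px solid yellow',
    color: 'yellow',
  });
  container.appendChild(element);
  return element;
}
```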